Let’s compute the cosine similarity between two text documents and observe how it works. For text, cosine similarity is often a better metric than Euclidean distance: two documents can be far apart by Euclidean distance (for example, because one is much longer than the other) and still be close to each other in terms of their content. The first document is `doc_1 = "Data is the oil of the digital economy"`.
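To make the Euclidean-versus-cosine point concrete, here is a minimal sketch, assuming a simple bag-of-words representation (lowercase, split on whitespace, one dimension per distinct word). `doc_2` is a hypothetical second document that just repeats `doc_1` twice, and `bag_of_words` is an illustrative helper, not a library function.

```python
from collections import Counter

import numpy as np

# doc_1 comes from the text; doc_2 is a hypothetical document that repeats
# the same sentence twice, so it is longer but says exactly the same thing.
doc_1 = "Data is the oil of the digital economy"
doc_2 = doc_1 + " " + doc_1


def bag_of_words(docs):
    """Return the shared vocabulary and one word-count vector per document."""
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({word for tokens in tokenized for word in tokens})
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append(np.array([counts[word] for word in vocab], dtype=float))
    return vocab, vectors


vocab, (v1, v2) = bag_of_words([doc_1, doc_2])

# Euclidean distance grows with document length...
euclidean = np.linalg.norm(v1 - v2)
# ...while cosine similarity only compares the direction of the two vectors.
cosine = v1 @ v2 / (np.linalg.norm(v1) * np.linalg.norm(v2))

print(f"Euclidean distance: {euclidean:.3f}")
print(f"Cosine similarity:  {cosine:.3f}")
```

Running it prints a Euclidean distance of about 3.162 (the square root of 10) but a cosine similarity of 1.000: the two vectors point in the same direction and differ only in length.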

# NumPy Cosine Similarity: How To

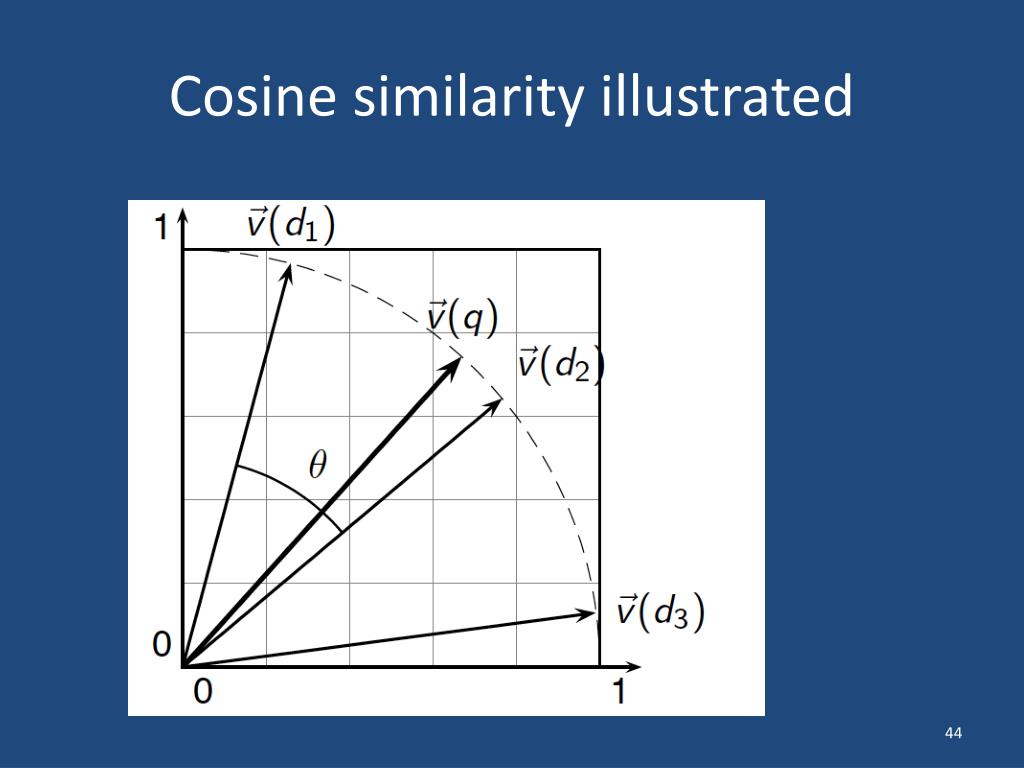

Hopefully, you can take some of the concepts from these examples and make your own cool projects! If you’re interested in learning more about cosine similarity, I HIGHLY recommend this YouTube video. Or, if you want to learn more about Natural Language Processing, you can check out my article on Sentiment Analysis. I’m also happy to cover more topics like this in the future, so let me know if you’re interested in learning more about a certain function, method, or concept.

Text similarity measures how close two text documents are to each other in terms of their context or meaning. There are various text similarity metrics, such as cosine similarity, Euclidean distance and Jaccard similarity, and each has its own way of measuring the similarity between two queries. In this tutorial, you will discover the cosine similarity metric and the mathematics behind it, with an example.

Cosine similarity is one of the metrics used in Natural Language Processing to measure the similarity between two documents irrespective of their size. Please refer to this tutorial to explore Jaccard similarity. Each document is represented in vector form (for example, one dimension per word in the vocabulary), so the text documents live in an n-dimensional vector space. Mathematically, cosine similarity measures the cosine of the angle between two n-dimensional vectors projected in a multi-dimensional space. If the cosine similarity score is 1, the two vectors have the same orientation; a value closer to 0 indicates that the two documents have less in common. When documents are represented by word counts, which are never negative, the score ranges from 0 to 1 (for arbitrary real-valued vectors it ranges from -1 to 1).

The mathematical equation of cosine similarity between two non-zero vectors $\mathbf{A}$ and $\mathbf{B}$ is:

$$\cos(\theta) = \frac{\mathbf{A} \cdot \mathbf{B}}{\|\mathbf{A}\| \, \|\mathbf{B}\|} = \frac{\sum_{i=1}^{n} A_i B_i}{\sqrt{\sum_{i=1}^{n} A_i^2} \, \sqrt{\sum_{i=1}^{n} B_i^2}}$$

Let’s look at an example of how to calculate the cosine similarity between two text documents.
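The cosine formula, cos(θ) = (A · B) / (||A|| ||B||), translates almost directly into NumPy. The sketch below assumes documents have already been turned into equal-length count vectors; `cosine_similarity` and `jaccard_similarity` are illustrative helper names rather than a specific library’s API. It checks the boundary cases described above and, for comparison, computes the Jaccard index on word sets.

```python
import numpy as np


def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (||a|| * ||b||) for two non-zero vectors."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    norm_product = np.linalg.norm(a) * np.linalg.norm(b)
    if norm_product == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return float(np.dot(a, b) / norm_product)


def jaccard_similarity(text_a, text_b):
    """|A & B| / |A | B| over sets of lowercase words, so repeats count once."""
    words_a = set(text_a.lower().split())
    words_b = set(text_b.lower().split())
    union = words_a | words_b
    if not union:
        raise ValueError("Jaccard similarity is undefined for two empty texts")
    return len(words_a & words_b) / len(union)


# Same orientation, different length: score is 1 (up to floating-point rounding).
print(cosine_similarity([1, 2, 0], [2, 4, 0]))
# No shared non-zero dimensions: score is 0.
print(cosine_similarity([1, 0, 0], [0, 3, 0]))
# With negative entries the score can approach -1; word counts never do this.
print(cosine_similarity([1, 1], [-1, -1]))
# Jaccard ignores counts: 2 shared words out of 4 distinct words.
print(jaccard_similarity("the cat sat", "the cat ran"))
```

Raising an error for zero vectors mirrors the “non-zero vectors” condition in the definition; returning 0.0 instead is another common convention.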

# NumPy Cosine Similarity: Code

There are also other methods of determining text similarity, like Jaccard’s Index, which is handy because it doesn’t take duplicate words into account.

You can probably guess that comparing images works very similarly to comparing text. Luckily, we don’t have to do all the NLP preprocessing; we just need to load each image and convert it to an array of RGB values. For this example, I’ll compare two pictures of dogs and then compare a dog with a frog to show the difference in scores. This process is pretty easy thanks to PIL and NumPy! Here are the two pictures of dogs I’ll be comparing:

Just as expected, the dog and frog images are significantly less similar than the two dog pictures. A score of 30% is still relatively high, but that is likely the result of both images having large dark areas in the top left along with some small color similarities. Just like with the text example, you can decide on a cutoff for what counts as “similar enough”, which makes cosine similarity great for clustering and other sorting methods. It’s also possible to use HSL values instead of RGB, or to convert the images to black and white before comparing them; depending on the type of images you’re working with, these could yield better results.

Whether you’re trying to build a face detection algorithm or a model that accurately sorts dog images from frog images, cosine similarity is a handy calculation that can really improve your results! I hope this article has been a good introduction to cosine similarity and a couple of ways you can use it to compare data. As data scientists, we can sometimes forget the importance of math, so I believe it’s always good to learn some of the theory and understand how and why our code works.
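The image comparison described above can be sketched as follows, assuming Pillow (imported as `PIL`) and NumPy are installed. `image_to_vector`, `rgb_cosine_similarity`, the 128x128 resize and the file names are illustrative assumptions; the text does not say how differently sized photos were handled, and resizing both to one size is a simple way to give the two vectors the same length.

```python
import numpy as np


def image_to_vector(path, size=(128, 128)):
    """Load an image, force 3-channel RGB, resize it, and flatten it to floats."""
    # Imported inside the function so rgb_cosine_similarity works without Pillow.
    from PIL import Image

    with Image.open(path) as img:
        rgb = img.convert("RGB").resize(size)
    return np.asarray(rgb, dtype=np.float64).ravel()


def rgb_cosine_similarity(pixels_a, pixels_b):
    """Cosine similarity between two RGB pixel arrays of the same shape."""
    a = np.asarray(pixels_a, dtype=np.float64).ravel()
    b = np.asarray(pixels_b, dtype=np.float64).ravel()
    if a.shape != b.shape:
        raise ValueError("resize both images to the same size before comparing")
    norm_product = np.linalg.norm(a) * np.linalg.norm(b)
    if norm_product == 0:
        raise ValueError("an all-black image has no direction to compare")
    return float(a @ b / norm_product)


# With real photos (hypothetical file names):
# score = rgb_cosine_similarity(image_to_vector("dog_1.jpg"), image_to_vector("dog_2.jpg"))

# Synthetic 2x2 images so the example runs without any files.
red = np.zeros((2, 2, 3))
red[..., 0] = 255
dark_red = red * 0.5
green = np.zeros((2, 2, 3))
green[..., 1] = 255

print(rgb_cosine_similarity(red, dark_red))  # 1.0: same color mix, only darker
print(rgb_cosine_similarity(red, green))     # 0.0: no channel in common
```

Because cosine similarity only looks at direction, a uniformly darker copy of an image scores 1.0 on raw RGB values, which is one reason a different color representation such as HSL can change the results.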

Cosine similarity is incredibly useful for analyzing text: as a data scientist, you can choose what percentage counts as too similar or not similar enough and see how that cutoff affects your results.
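A cutoff like this can be applied to a whole collection at once. The sketch below, assuming each document is already a row of word counts, computes every pairwise cosine similarity with NumPy and lists the pairs at or above a chosen cutoff; `pairwise_cosine`, `pairs_above`, the count matrix and both cutoff values are hypothetical.

```python
import numpy as np


def pairwise_cosine(matrix):
    """Cosine similarity between every pair of rows in a 2-D array."""
    m = np.asarray(matrix, dtype=float)
    norms = np.linalg.norm(m, axis=1, keepdims=True)
    if np.any(norms == 0):
        raise ValueError("every row needs at least one non-zero count")
    unit = m / norms  # scale each row to length 1
    return unit @ unit.T  # dot products of unit vectors are cosines


def pairs_above(similarity, cutoff):
    """Return (i, j, score) for every pair i < j with score >= cutoff."""
    rows, cols = np.triu_indices(similarity.shape[0], k=1)
    return [
        (int(i), int(j), float(similarity[i, j]))
        for i, j in zip(rows, cols)
        if similarity[i, j] >= cutoff
    ]


# Hypothetical word-count vectors for three documents over a 4-word vocabulary.
counts = np.array([
    [2, 1, 0, 0],
    [2, 2, 0, 1],
    [0, 0, 3, 1],
])

sim = pairwise_cosine(counts)
for cutoff in (0.5, 0.95):
    print(cutoff, pairs_above(sim, cutoff))
```

With these counts, documents 0 and 1 have a similarity of about 0.894, so they are flagged at a cutoff of 0.5 but not at 0.95, while document 2 shares too little with either of them to be flagged at all.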

These two documents are only 7.6% similar, which makes sense because the word “Bee” doesn’t appear even once in the third document. This process can easily be scaled up to larger documents, which should leave less room for statistical error in the calculation. If two 50-word documents are 50% similar, it’s likely because half of the words are “the”, “to”, “a”, etc. But when you have 50% similarity between 1000-word documents, you might be dealing with plagiarism.
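The stop-word effect described above is easy to reproduce. This sketch uses two hypothetical six-word sentences and a deliberately tiny, hypothetical stop-word list (real projects usually rely on a curated list); `word_counts` and `cosine_from_counts` are illustrative helpers that store sparse count vectors in `collections.Counter` objects.

```python
import math
from collections import Counter

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"a", "and", "in", "is", "of", "on", "the", "to"}


def word_counts(text, drop_stop_words=False):
    """Lowercase, split on whitespace, and optionally drop stop words."""
    words = text.lower().split()
    if drop_stop_words:
        words = [w for w in words if w not in STOP_WORDS]
    return Counter(words)


def cosine_from_counts(counts_a, counts_b):
    """Cosine similarity of two sparse word-count vectors stored as Counters."""
    dot = sum(counts_a[w] * counts_b[w] for w in counts_a.keys() & counts_b.keys())
    squared_norms = (sum(c * c for c in counts_a.values())
                     * sum(c * c for c in counts_b.values()))
    if squared_norms == 0:
        return 0.0  # assumption: an empty document counts as "not similar"
    return dot / math.sqrt(squared_norms)


doc_a = "the cat sat on the mat"
doc_b = "the dog ran to the park"

with_stop_words = cosine_from_counts(word_counts(doc_a), word_counts(doc_b))
without_stop_words = cosine_from_counts(
    word_counts(doc_a, drop_stop_words=True),
    word_counts(doc_b, drop_stop_words=True),
)
print(with_stop_words, without_stop_words)
```

The two sentences score exactly 0.5 when stop words are kept, purely because both contain “the” twice, and 0.0 once the stop words are removed, since they share no other word.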
